Damerau-Levenshtein Java methods updated

November 30th, 2009 | by Sean |I had a long-overdue review of the Damerau-Levenshtein Java methods I wrote, extended the regression tests that are included in the class, and discovered a few bugs still hiding in the code. I’ve pretty much re-written the methods so that they’re much easier to read, are much closer in structure to the pseudo-code posted at wikipedia, and also perform slightly better.

The function specifications have changed slightly, with an overloaded version that provides exactly the same interface and behaviour as before, at a slight performance penalty owing to two extra method invocations. The methods previously allocated their own workspace (an int array) for tracking the cost of edits, but now expect a pre-allocated workspace to be passed to them as a parameter. Overloaded methods having the old style of parameters now invoke a class method to allocate a new workspace, and pass the int array along with the supplied parameters to the new methods.

For one-off invocation of the methods, the change introduces a small performance hit. For repeated invocations, I suggest your calling code allocate the workspace, and reuse it in each call to the edit-distance methods:

int[] aResult = new int[candidateArray.length];

int[] workspace

= Levenshtein.getWorkspace(matchString.length()

, longestCandidate.length());

int i = 0;

for (String candidate : candidateArray)

candidateArray[i++]

= damlev(matchString, candidate, workspace);

From tests here, you should expect a 6-10% performance boost – using a pre-allocated workspace – on unlimited (whole strings are tested) invocations of the functions. If you’re using the limited (levlim, damlevlim) versions, the speedup can be enormous, depending on how poorly matched your candidate strings are. I haven’t tested with a ‘real-world’ data-set yet, but a worst-case ‘dumb test’ of two totally different strings gives average timings of down to 16 nanoseconds for the pre-allocated workspace, 4,500 for the locally allocated workspace. If your workload consists of very-closely matched strings, you’ll get a performance boost closer to the 6-10% above.

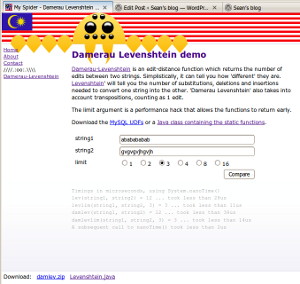

You can try out the methods at spider.my’s Damerau Levenshtein demo page, and download the code from there too.

The MySQL UDFs are probably badly in need of a code review, and I never did write a proper set of test cases for them, so they’re next. I’m not actually using them in any projects at the moment, so they’re not next in terms of priority. The first person to state a preference for recently-maintained MySQL UDFs get’s a free code review and regression tests.